Understanding complex scenes from a single camera

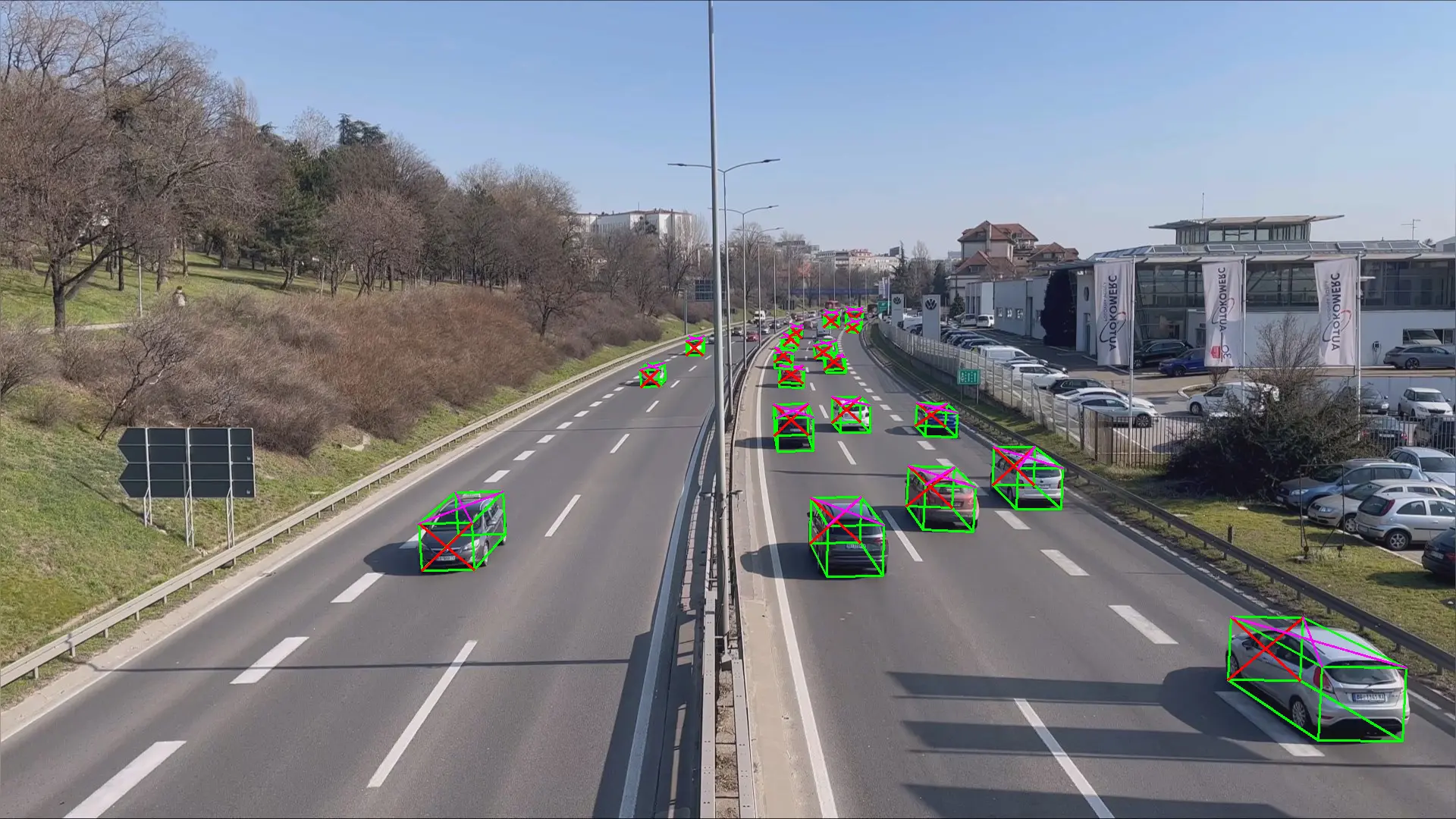

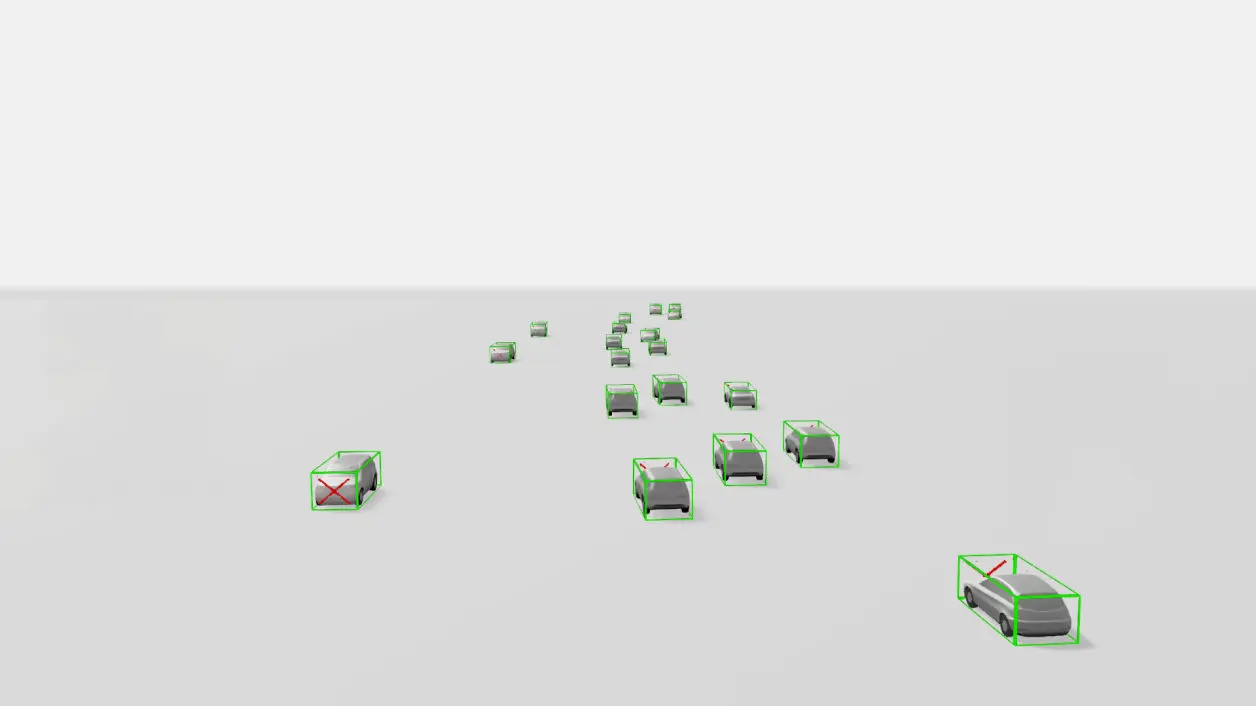

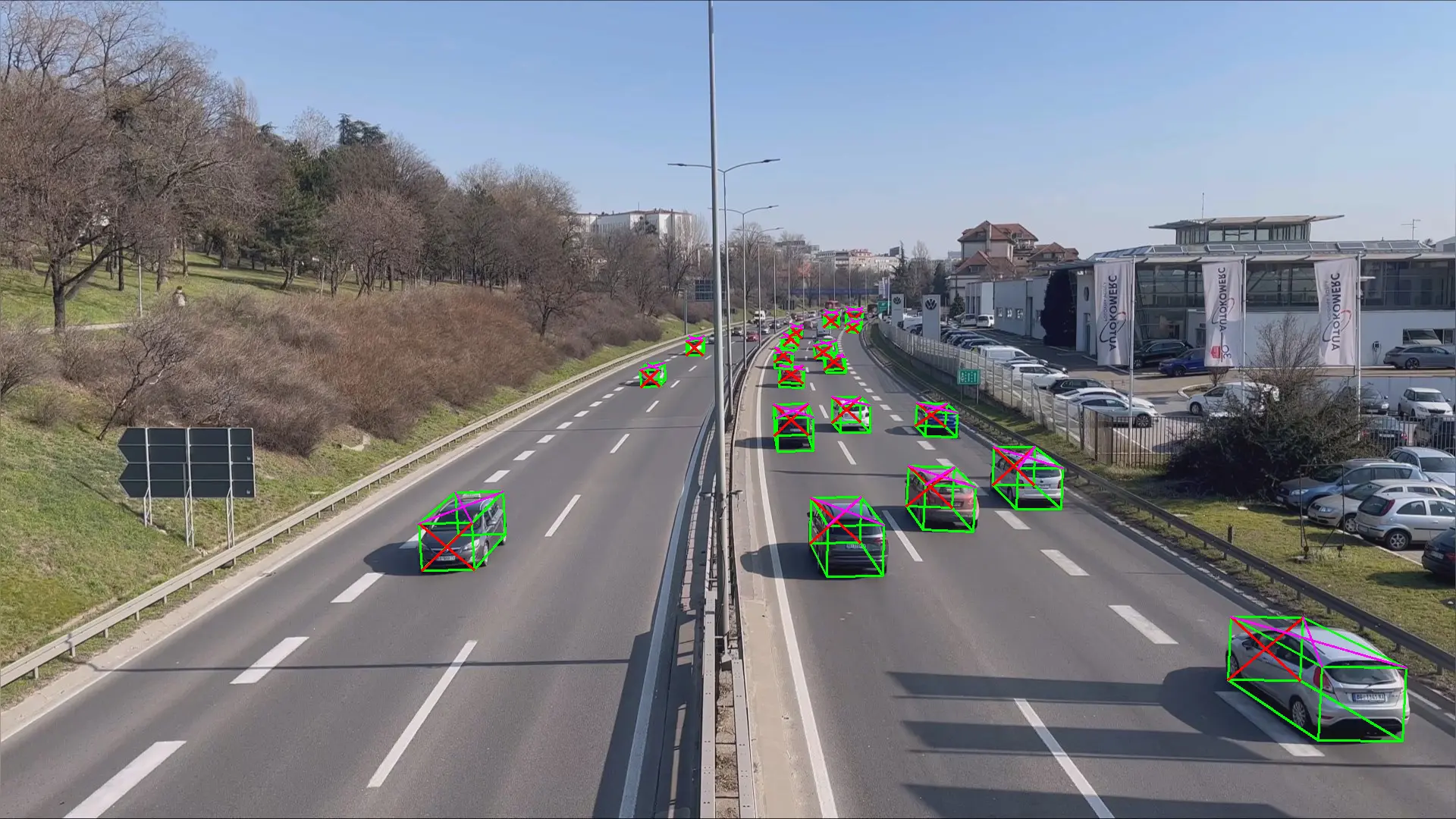

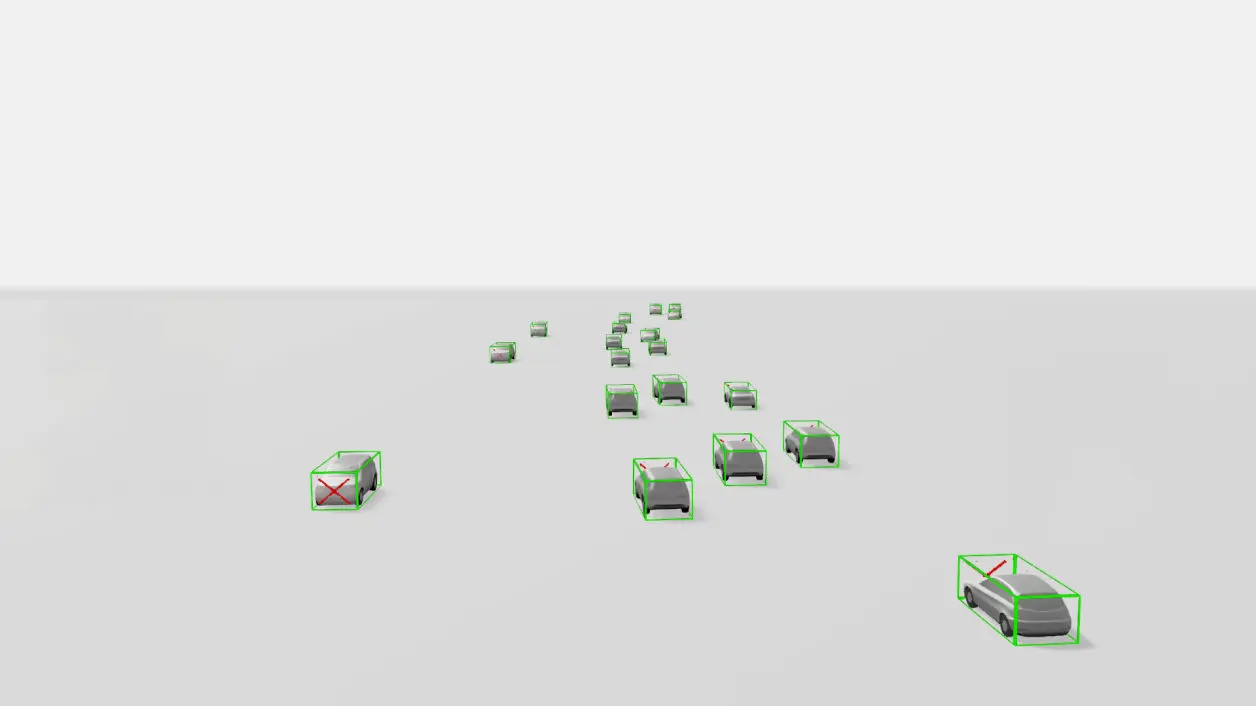

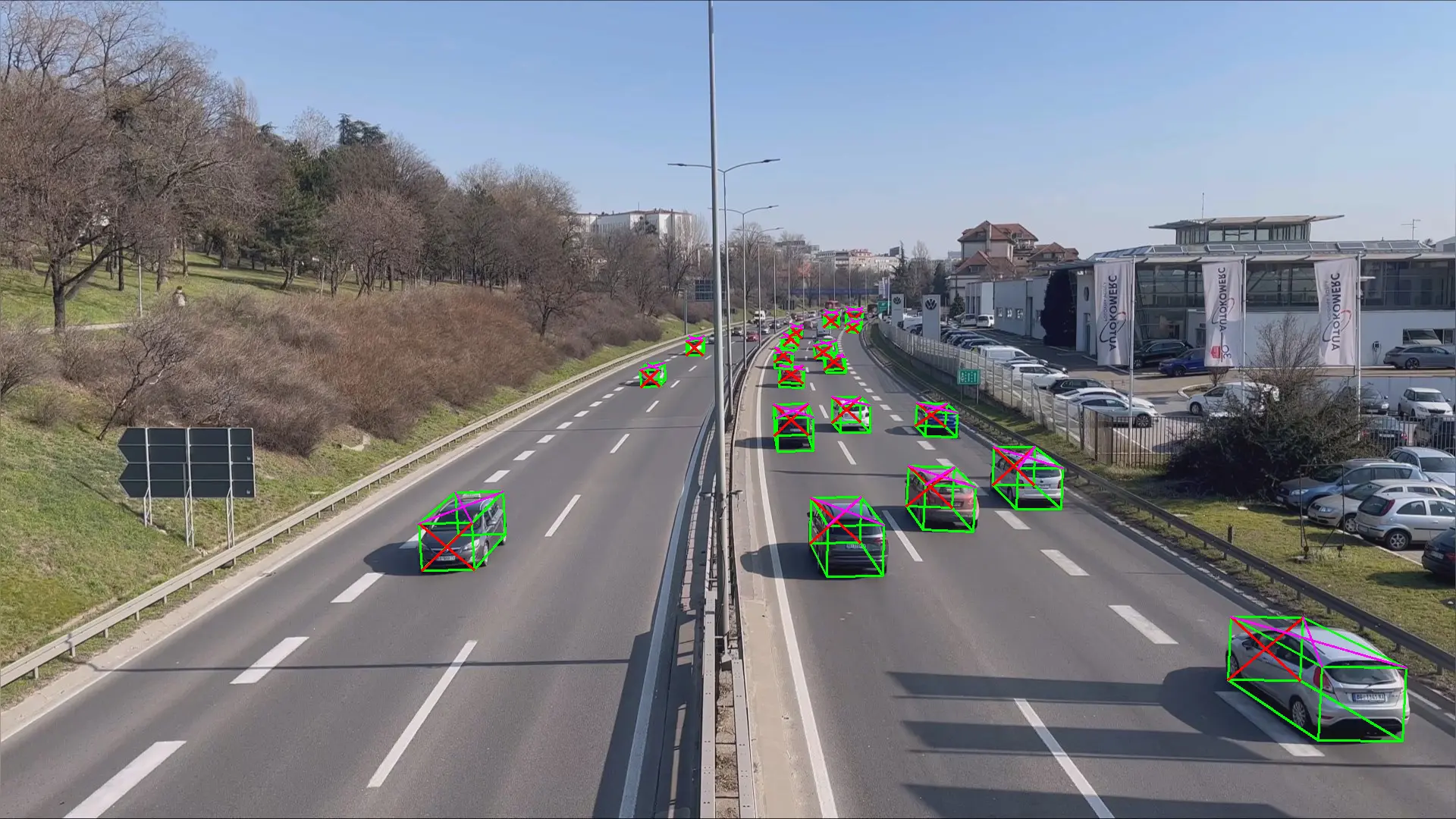

The 3D output shown below is generated from a single 2D image—without LiDAR, stereo cameras, or additional sensors.

Pseudo-LiDAR Detecting Cars

Machine Can See has developed Pseudo-LiDAR—a camera-only technology that transforms standard monocular cameras into real-time 3D perception systems for understanding objects, movement, and space.

We have developed and patented a technology that enables detailed 3D analysis of street and highway traffic using standard cameras.

This makes it possible to extract insights that were previously unavailable without expensive LiDAR systems—supporting planning, optimization, and long-term infrastructure decisions.


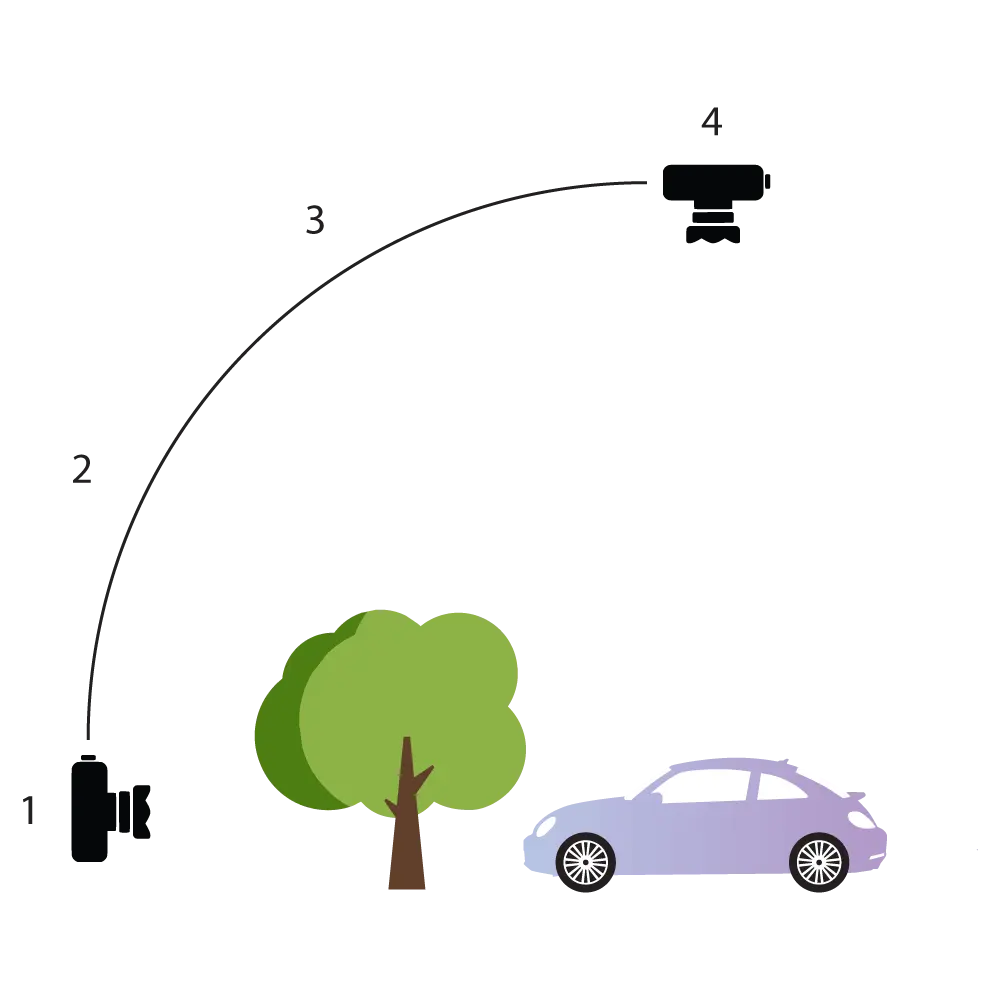

By combining data from multiple cameras viewing the same scene from different angles, the system can track objects even when they are temporarily occluded.

This results in more stable detection, improved tracking accuracy, and a more complete understanding of the environment.
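
The source does not say how the cameras' views are fused. The sketch below shows one standard option: inverse-variance weighting. It assumes each camera has already mapped its detection of an object into a shared ground-plane frame (x, y in metres) with a per-camera variance. The names `Observation` and `fuse_observations` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Observation:
    """One camera's estimate of an object's ground-plane position (hypothetical format)."""
    camera_id: str
    x: float         # metres, shared world frame
    y: float         # metres, shared world frame
    variance: float  # positional uncertainty in m^2; larger means less trusted


def fuse_observations(
    observations: Sequence[Optional[Observation]],
) -> Optional[Tuple[float, float, float]]:
    """Fuse per-camera estimates by inverse-variance weighting.

    Cameras in which the object is occluded pass None and are skipped, so the
    object stays localized as long as at least one camera still sees it.
    Returns (x, y, fused_variance), or None if no camera sees the object.
    """
    visible = [o for o in observations if o is not None]
    if not visible:
        return None
    weights = [1.0 / o.variance for o in visible]
    total = sum(weights)
    x = sum(w * o.x for w, o in zip(weights, visible)) / total
    y = sum(w * o.y for w, o in zip(weights, visible)) / total
    return x, y, 1.0 / total
```

With two equally trusted cameras, the fused position is their average, and the fused variance is half of either one. If camera A is occluded, passing `[None, obs_b]` still returns camera B's estimate. The track therefore survives the occlusion.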

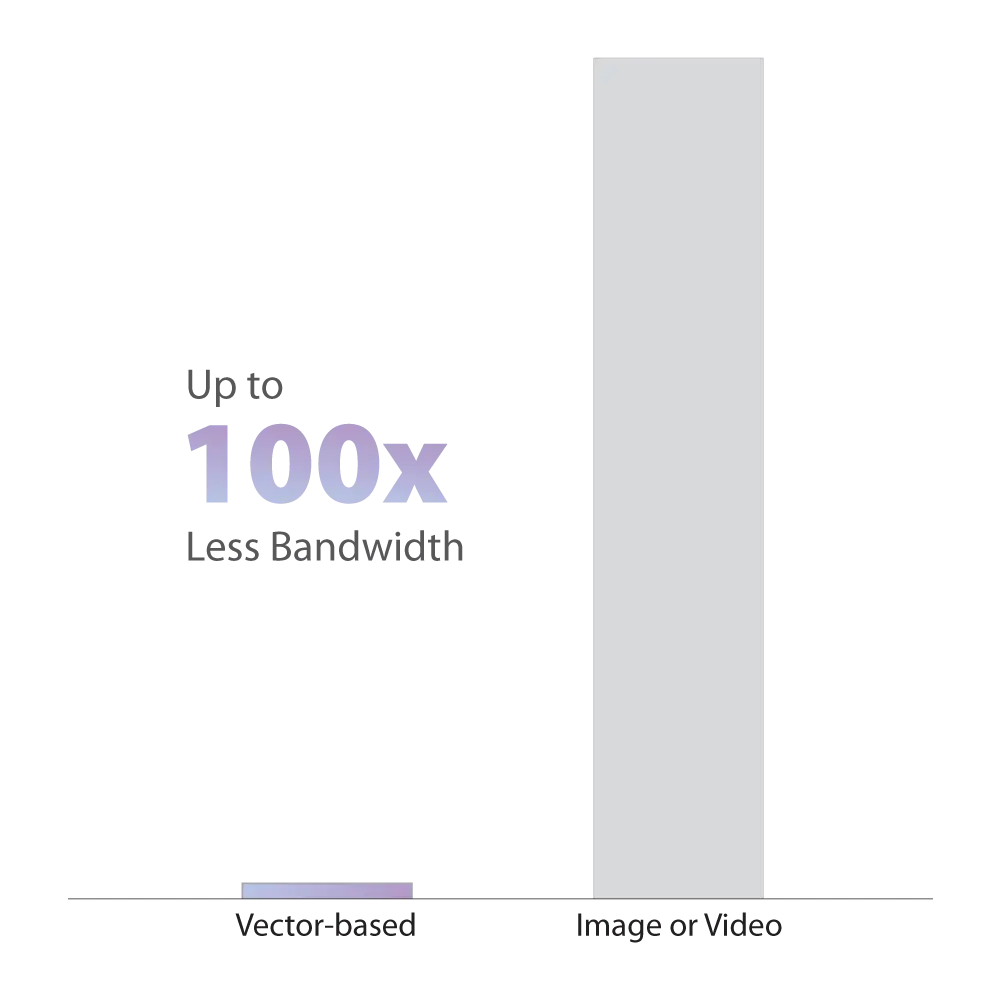

The software runs entirely on the edge, without requiring continuous video streaming to the cloud.

This reduces bandwidth usage by up to 100× compared to traditional video-based systems, enabling reliable operation in locations with limited connectivity.
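
The saving comes from transmitting compact per-object records instead of encoded video. The back-of-envelope sketch below shows how such a ratio can be estimated. Every number in it is an illustrative assumption, not a measured figure from the system: the video bitrate, object count, record size, and update rate.

```python
def vector_stream_kbps(objects: int, bytes_per_object: int, updates_per_s: float) -> float:
    """Bitrate of a vector stream in kilobits per second (1 kbit = 1000 bits)."""
    return objects * bytes_per_object * 8 * updates_per_s / 1000.0


def bandwidth_ratio(video_kbps: float, objects: int, bytes_per_object: int,
                    updates_per_s: float) -> float:
    """How many times less bandwidth the vector stream needs than the video stream."""
    return video_kbps / vector_stream_kbps(objects, bytes_per_object, updates_per_s)


# Illustrative assumptions only: a 4 Mbit/s compressed video stream versus
# 20 tracked objects, 32-byte records, 10 updates per second.
video_kbps = 4000.0
vec_kbps = vector_stream_kbps(objects=20, bytes_per_object=32, updates_per_s=10)
print(f"vector stream: {vec_kbps:.1f} kbit/s")
print(f"ratio: {bandwidth_ratio(video_kbps, 20, 32, 10):.1f}x")
```

Under these assumptions, the vector stream needs about 51 kbit/s, roughly 78 times less than the video. Fewer objects or a lower update rate would push the ratio higher.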

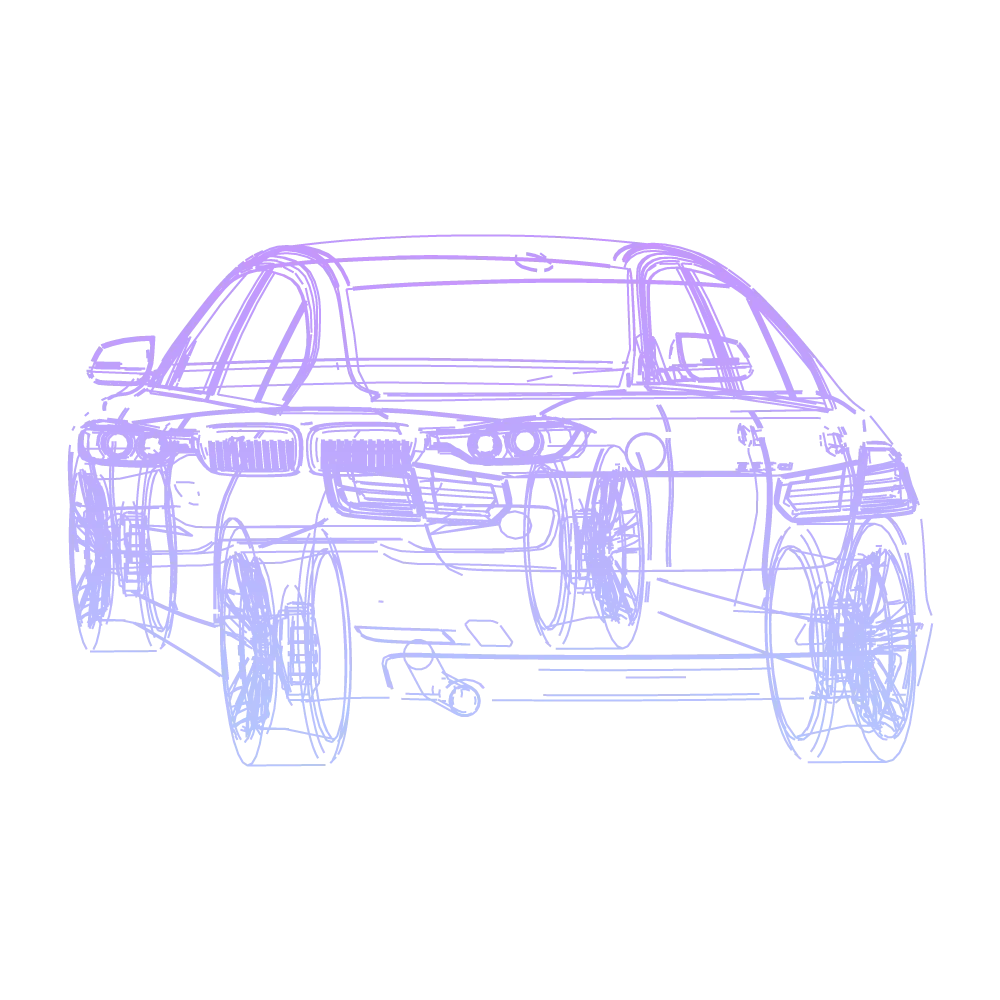

Our system converts visual input into vector data that represents movement and spatial relationships—without retaining identifiable images.

Images can be discarded immediately after analysis, making the technology suitable for public spaces and privacy-sensitive environments.
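
The sketch below makes "vector data" concrete with a hypothetical per-object record and a fixed-size binary encoding built with Python's `struct` module. The field set and byte layout are assumptions for illustration; the source does not specify the actual output format. The record carries only geometry and motion, with no pixels.

```python
import struct
from dataclasses import dataclass

# Hypothetical little-endian layout (no padding): uint32 track id, uint8 class
# code, float64 timestamp, then float32 x, y, z (m), heading (rad), speed (m/s).
RECORD_FORMAT = "<IBd5f"
RECORD_SIZE = struct.calcsize(RECORD_FORMAT)


@dataclass
class TrackRecord:
    track_id: int
    class_code: int   # e.g. 0 = car, 1 = pedestrian (hypothetical codes)
    timestamp: float  # seconds since epoch
    x: float
    y: float
    z: float
    heading: float
    speed: float

    def pack(self) -> bytes:
        """Serialize to a fixed-size byte string; no image data is included."""
        return struct.pack(RECORD_FORMAT, self.track_id, self.class_code, self.timestamp,
                           self.x, self.y, self.z, self.heading, self.speed)

    @classmethod
    def unpack(cls, data: bytes) -> "TrackRecord":
        """Decode a record produced by pack()."""
        return cls(*struct.unpack(RECORD_FORMAT, data))


rec = TrackRecord(7, 0, 1700000000.5, 12.5, -3.25, 0.0, 1.5, 8.0)
payload = rec.pack()
print(RECORD_SIZE, len(payload))  # 33 33
```

Each record in this layout is 33 bytes. It describes where an object is and how it moves, but it cannot be turned back into an image of the scene.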

By combining computer vision with physics-based modeling, the system can understand motion, predict trajectories, and maintain tracking even during temporary occlusions.

This approach delivers more reliable results than purely 2D, image-based methods.
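
The source does not say which motion model the system uses. One common way to pair detections with physics-based motion modeling is a Kalman filter with a constant-velocity model, swapped in here for illustration. The 1D sketch below shows the property described above. While an object is occluded, the filter keeps predicting its position from its estimated velocity. It corrects itself once measurements return. The class name, initial variances, and noise values are assumptions.

```python
class ConstantVelocityKalman1D:
    """1D constant-velocity Kalman filter: state is (position, velocity)."""

    def __init__(self, position: float, velocity: float = 0.0,
                 pos_var: float = 1.0, vel_var: float = 10.0,
                 accel_noise: float = 0.5, meas_var: float = 0.25):
        self.p, self.v = position, velocity
        # Covariance [[a, b], [b, d]] stored as three scalars (it is symmetric).
        self.a, self.b, self.d = pos_var, 0.0, vel_var
        self.q = accel_noise  # variance of the random acceleration (process noise)
        self.r = meas_var     # variance of a position measurement

    def predict(self, dt: float) -> float:
        """Physics step: x' = F x, P' = F P F^T + Q. Returns the predicted position."""
        self.p += self.v * dt
        a, b, d, q = self.a, self.b, self.d, self.q
        self.a = a + 2 * dt * b + dt * dt * d + q * dt**4 / 4
        self.b = b + dt * d + q * dt**3 / 2
        self.d = d + q * dt**2
        return self.p

    def update(self, z: float) -> None:
        """Measurement step: correct the state with an observed position z."""
        s = self.a + self.r               # innovation variance
        k0, k1 = self.a / s, self.b / s   # Kalman gain
        y = z - self.p                    # innovation
        self.p += k0 * y
        self.v += k1 * y
        a, b, d = self.a, self.b, self.d
        self.a = (1 - k0) * a
        self.b = (1 - k0) * b
        self.d = d - k1 * b


# Hypothetical scenario: an object starts at 0 m and moves at 10 m/s. Position
# measurements arrive every 0.1 s, then the object is occluded (None) for 0.3 s.
kf = ConstantVelocityKalman1D(position=0.0)
for z in [1.0, 2.0, 3.0, 4.0, 5.0, None, None, None]:
    kf.predict(0.1)       # physics step: always runs, even without a detection
    if z is not None:
        kf.update(z)      # vision step: only when the object is visible
print(f"coasted position: {kf.p:.2f} m, velocity estimate: {kf.v:.2f} m/s")
```

During the three occluded steps, the filter coasts forward on its velocity estimate. Its position variance `a` grows over those steps, reflecting increasing uncertainty until the object is detected again.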


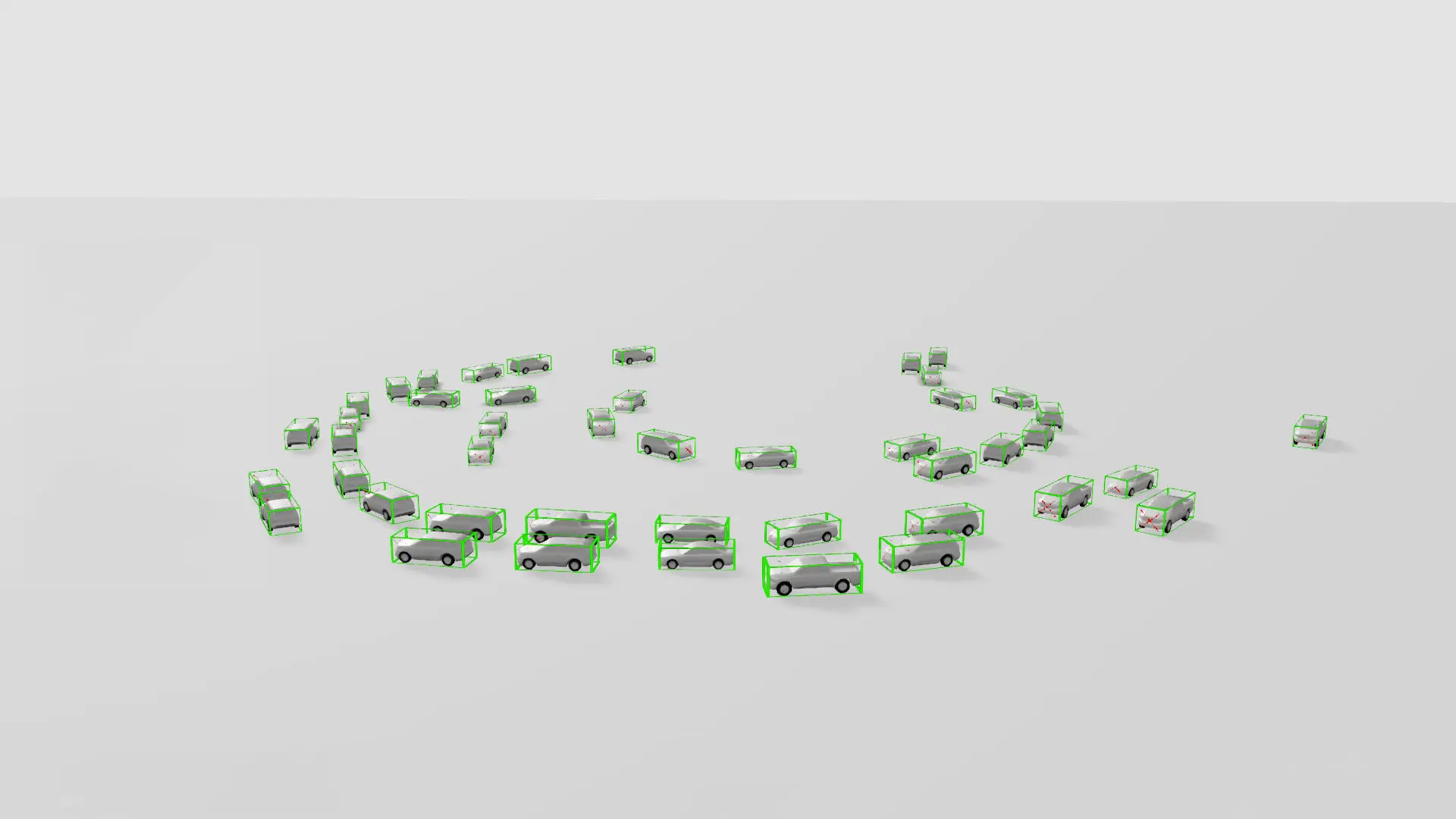

Detailed 3D vector output of a roundabout

Camera-only 3D perception that estimates position, orientation, and motion—enabling more reliable tracking and analytics for traffic and parking.

2D detection lacks depth, so it cannot reliably determine true position, orientation, or distance—especially under occlusion or camera movement.
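
The depth ambiguity of 2D detection follows from the pinhole camera model. A pixel fixes only a ray through the camera centre, not a point on that ray. In this sketch, the intrinsics `fx`, `fy`, `cx`, `cy` are arbitrary example values. Two points at different distances along the same ray land on exactly the same pixel.

```python
def project(point, fx=1000.0, fy=1000.0, cx=960.0, cy=540.0):
    """Pinhole projection of a camera-frame point (X right, Y down, Z forward, metres)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


near = (1.0, 0.5, 10.0)  # 10 m away
far = (2.0, 1.0, 20.0)   # twice as far, along the same ray
print(project(near), project(far))  # (1060.0, 590.0) (1060.0, 590.0)
```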

Comparison: 3D-based perception vs. 2D-only perception

LiDAR is a sensing technology that uses laser pulses to measure distance and generate 3D representations of objects and environments.

It is commonly used in mapping, autonomous systems, and advanced traffic monitoring, but requires specialized and often costly hardware.
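
LiDAR measures distance by time of flight. A pulse travels to the target and back, so the range is half the round-trip time multiplied by the speed of light. For example, a round trip of 200 ns corresponds to about 30 m:

$$
d = \frac{c\,\Delta t}{2}
  = \frac{(2.998 \times 10^{8}\ \mathrm{m/s})(200 \times 10^{-9}\ \mathrm{s})}{2}
  \approx 29.98\ \mathrm{m}
$$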

Pseudo-LiDAR is a camera-only 3D perception approach that estimates depth, position, and motion using computer vision and AI.

Instead of laser sensors, it relies on standard cameras and edge processing to generate LiDAR-like spatial understanding.
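
In the research literature, "pseudo-LiDAR" usually means two steps. First, estimate a per-pixel depth map from camera images. Second, back-project it into a 3D point cloud that downstream code can treat like LiDAR data. The sketch below shows only the back-projection step. It assumes a depth map (in metres) and pinhole intrinsics are already available. It illustrates the general technique, not Machine Can See's patented method. The function name and the `max_depth` cutoff are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def depth_to_point_cloud(depth: Sequence[Sequence[float]],
                         fx: float, fy: float, cx: float, cy: float,
                         max_depth: float = 80.0) -> List[Point3D]:
    """Back-project a depth map into camera-frame 3D points.

    For pixel (u, v) with depth Z: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with no valid depth (<= 0) or beyond max_depth (a hypothetical
    cutoff) are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if 0.0 < z <= max_depth:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# A tiny 2x2 depth map; 0.0 marks a pixel with no depth estimate.
depth_map = [[10.0, 0.0],
             [20.0, 5.0]]
cloud = depth_to_point_cloud(depth_map, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(cloud)
```

The resulting list of (X, Y, Z) points has the same form as a LiDAR scan. This is what lets LiDAR-style 3D detection and tracking run on camera input.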

The system infers 3D structure by analyzing visual cues such as motion, geometry, and perspective from standard 2D camera input.

These signals are combined using AI and physics-based models to estimate depth and spatial relationships.
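
Consider one geometric cue as an example. Suppose the camera's mounting height and downward pitch are known, and the road is assumed to be flat. Then the image row where an object touches the ground determines its distance. This flat-ground model is a textbook technique shown for illustration, and the parameter values are assumptions. The source does not detail which cues or models the system combines.

```python
import math


def ground_distance(v: float, camera_height: float, pitch: float,
                    fy: float, cy: float) -> float:
    """Horizontal distance (m) to the ground point seen at image row v.

    Assumes a pinhole camera camera_height metres above a flat road, tilted
    down by pitch radians, with focal length fy and principal-point row cy in
    pixels. Larger v (lower in the image) means a closer ground point.
    """
    angle_below_horizon = pitch + math.atan((v - cy) / fy)
    if angle_below_horizon <= 0:
        raise ValueError("row is at or above the horizon; the ray never meets the ground")
    return camera_height / math.tan(angle_below_horizon)


# Level camera 1.5 m high: a row 100 px below centre with fy = 1000 px looks
# down by atan(0.1), so the ground point is 1.5 / 0.1 = 15 m away.
d = ground_distance(v=640.0, camera_height=1.5, pitch=0.0, fy=1000.0, cy=540.0)
print(round(d, 2))  # 15.0
```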

Cloud processing is not required. The technology is designed to run entirely on the edge.

Only compact vector-based results are produced, without the need for continuous video streaming or cloud-based processing.

Multiple cameras can be combined. Data from cameras viewing the same area can be fused to improve robustness and handle occlusions.

This provides a more complete and stable understanding of the scene than a single-camera setup.

Privacy is built into the system by design.

Visual input is converted into vector-based data for scene understanding, and images can be discarded immediately after analysis. No video streams are stored or transmitted.

Edge-first processing and standard security measures help ensure data integrity and controlled access.